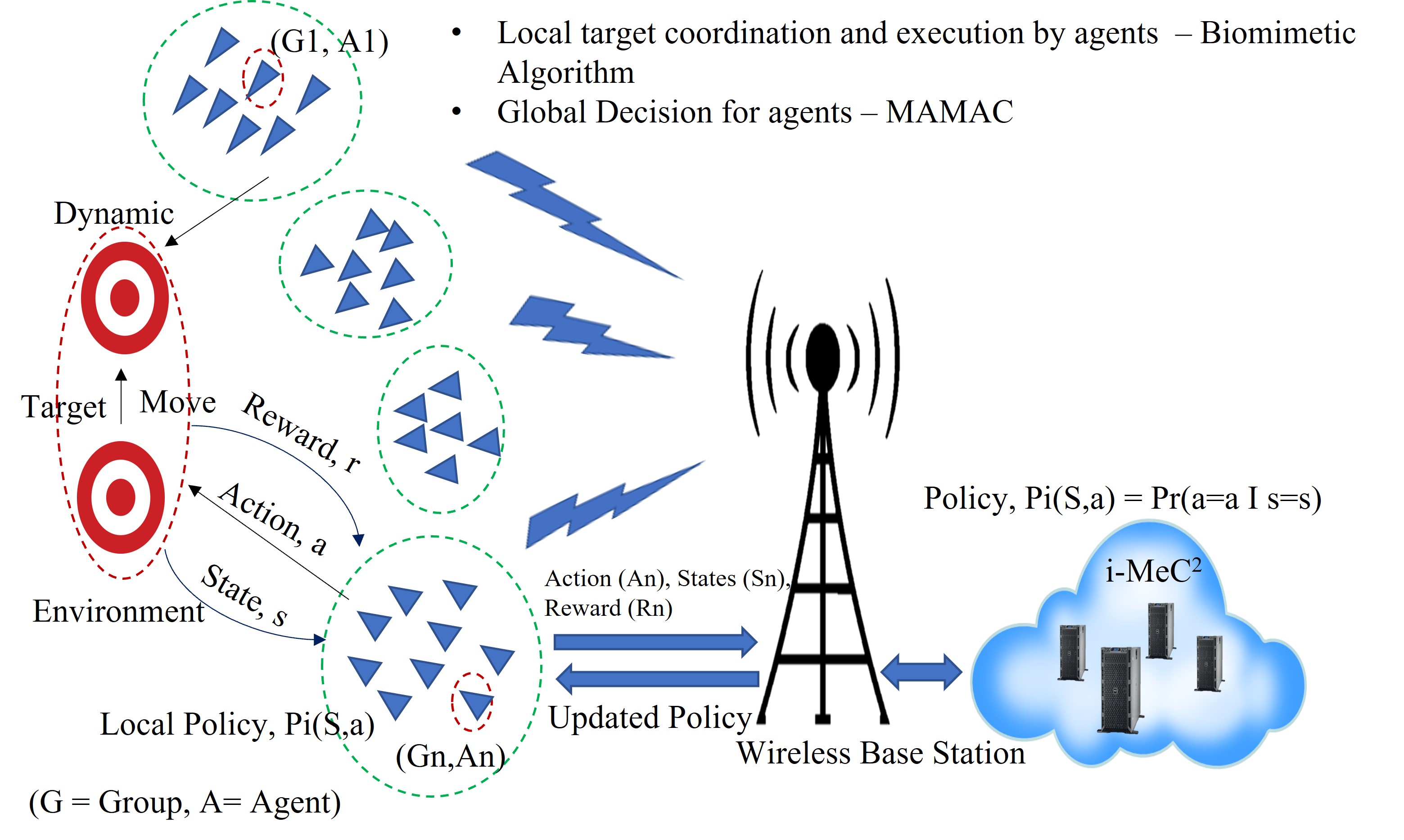

We work on challenging open problems at the intersection of deep learning, reinforcement learning, and robotics. We develop algorithms and systems that unify bio-inspired AI concepts for low-level control and reinforcement learning to teach ground and aerial robots to perceive and interact with the physical world. These robots carry out collaborative tasks for disaster tolerance in Urban Smart Cities (USC).